Artificial Intelligence (AI) is a hot topic! Many companies are currently considering how they can use AI to improve their businesses, and new CXO titles such as Chief Data Officer and Data Privacy Officer are starting to appear. Furthermore, people are starting to ask what their data is being used for and want to know how AI will affect their daily lives.

Definitions

Before we can talk about ethics, we need to define what AI is and understand how it relates to Machine Learning. At neurospace, we use the following definition of Artificial Intelligence:

“The theory and development of making a computer system perform a certain task that normally requires human intelligence” (dictionary definition)

More specifically, we take human intelligence to mean characteristics such as thinking and working like a human.

Additionally, we use Arthur Samuel's definition of machine learning from 1959:

“Machine learning is a field of study that gives computers the ability to learn without being explicitly programmed”

In other words, instead of programming rules by hand, we use data to create machine learning models that describe parts of the world.
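To make the contrast concrete, here is a minimal Python sketch under illustrative assumptions: instead of hard-coding the rule y = 2x + 1, we estimate it from example data with ordinary least squares. The function name `fit_line` and the data points are hypothetical and only show the principle.

```python
def fit_line(xs, ys):
    """Estimate slope and intercept of y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the best-fitting slope
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical example data produced by a rule the program is never told: y = 2x + 1
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)  # 2.0 1.0
```

The rule never appears in the code; it is recovered from the examples. Real machine learning models use far more flexible functions than a straight line, but the idea of learning from data rather than from hand-written rules is the same.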

What is AI?

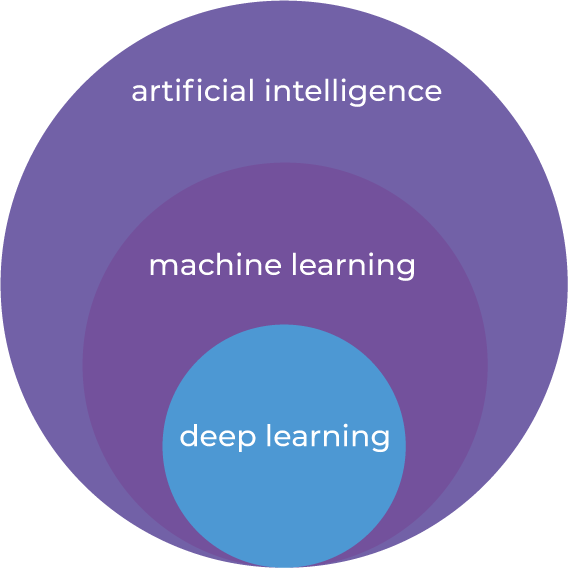

To understand how AI and ML are connected, and how they are not necessarily connected, we should look at the history. It was not until the 1950s that the idea of connecting human intelligence and machines was put forward [1]. At that time, the available technology made it difficult to create artificial intelligence. Machine learning was defined by Arthur Samuel in 1959, but even in the 1970s computers were not fast enough to run machine learning efficiently, and their memory was limited [1] [2].

Neural networks, including deep neural networks, are a specific family of algorithms within machine learning, and machine learning is one way to create artificial intelligence. However, it is possible to build artificial intelligence without machine learning, just as it is possible to build machine learning models that are not artificial intelligence.

Connection between AI, ML and Deep Learning

Within machine learning, there is a specific branch called reinforcement learning. In reinforcement learning, the model is built by learning from its own past behavior. At neurospace, we often compare this learning method to how children learn to walk. At first they are unsteady, and they might fall a few times. However, every fall teaches them what not to do, and every step teaches them what to do. That is how reinforcement learning works as well! Reinforcement learning is driven by rewards. When the model does something right, it has been programmed to “get a reward”, and it learns to repeat the same “movement” over and over again. Every time it fails, it learns what not to do. This makes reinforcement learning a rather complex area within machine learning, where the model learns from past behavior and improves over time. Because the learning is done by a machine, it can be sped up to thousands of times faster than a human learns. This is why we see models capable of beating humans at various tasks, such as the board game Go.
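The reward-driven trial and error described above can be sketched with tabular Q-learning, one classic reinforcement learning algorithm. This is a minimal illustration, not neurospace's method: the five-cell corridor, the reward of 1 at the goal, and the parameters `alpha`, `gamma` and `epsilon` are all hypothetical choices made for the example.

```python
import random

N_STATES = 5          # hypothetical toy world: positions 0..4 in a corridor
GOAL = N_STATES - 1   # reaching the last cell ends the episode with reward 1
ACTIONS = (-1, +1)    # step left or step right

def step(state, action):
    """Move within the corridor and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    if next_state == GOAL:
        return next_state, 1.0, True   # the "reward" for doing something right
    return next_state, 0.0, False

def train_q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # q[s][a] estimates the future reward of taking action a in state s
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore at random sometimes (or when undecided), otherwise repeat what has paid off
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Learn from this experience: move the estimate toward reward + discounted future value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

def greedy_policy(q):
    """The learned behavior: the best-valued action in each non-goal state."""
    return [ACTIONS[0] if q[s][0] > q[s][1] else ACTIONS[1] for s in range(GOAL)]

q = train_q_learning()
print(greedy_policy(q))  # [1, 1, 1, 1]
```

At first the agent wanders randomly, like the unsteady first steps of a child; once reaching the goal has been rewarded, that value propagates backwards and the agent learns to always step right.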

At neurospace, we distinguish between AI and ML by how a model learns and how it improves. In classification, regression, or clustering models, an algorithm finds patterns and learns during a training phase; afterwards, in a testing phase, we can see how well it has learned. In reinforcement learning, the algorithm or machine learning model keeps learning from its past behavior.
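The training and testing phases can be illustrated with a minimal classifier sketch. We assume a nearest-centroid classifier and a tiny hypothetical two-class dataset; the function names are illustrative. The model is fitted once on the training data and then only evaluated on held-out test data, in contrast to the reinforcement learning sketch, which keeps updating as it acts.

```python
def train_nearest_centroid(points, labels):
    """Training phase: summarise each class by the mean (centroid) of its training points."""
    sums, counts = {}, {}
    for (x, y), label in zip(points, labels):
        sx, sy = sums.get(label, (0.0, 0.0))
        sums[label] = (sx + x, sy + y)
        counts[label] = counts.get(label, 0) + 1
    return {label: (sx / counts[label], sy / counts[label]) for label, (sx, sy) in sums.items()}

def predict(centroids, point):
    """Assign the label of the closest centroid (squared Euclidean distance)."""
    x, y = point
    return min(centroids, key=lambda lab: (x - centroids[lab][0]) ** 2 + (y - centroids[lab][1]) ** 2)

def accuracy(centroids, points, labels):
    """Testing phase: fraction of held-out points the trained model labels correctly."""
    correct = sum(predict(centroids, p) == label for p, label in zip(points, labels))
    return correct / len(labels)

# Hypothetical data: training examples are used to learn, test examples only to evaluate
train_points = [(0, 0), (1, 0), (0, 1), (5, 5), (6, 5), (5, 6)]
train_labels = ["a", "a", "a", "b", "b", "b"]
test_points = [(1, 1), (5, 4), (0, 2), (3, 2)]
test_labels = ["a", "b", "a", "b"]

model = train_nearest_centroid(train_points, train_labels)
print(accuracy(model, test_points, test_labels))  # 0.75
```

The borderline test point (3, 2) is misclassified, which is exactly the kind of shortcoming the testing phase is meant to reveal before a model is put to use.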

Ethics and AI

Artificial Intelligence can be used for good and bad purposes like any other technology, and there is a fine line between using an algorithm for good and for bad. Artificial Intelligence in itself is not something we should fear, but in the hands of the wrong people, it can be used for bad purposes.

As an example, consider Microsoft’s Tay. The objective of Tay was to create a “teen girl” chatbot for engagement and entertainment on Twitter. Microsoft created a model that would learn through interaction with other Twitter accounts. The engagement went far beyond teenagers, and many different people tweeted at it to see what Tay could do. Because Tay was designed to learn from what it saw, it changed within less than 24 hours from a positive account to one tweeting “wildly inappropriate and reprehensible words and images”.

Now, is it Microsoft’s fault that this happened? Not necessarily. The model was designed to learn from us, and we, the people, abused that.

There is no doubt that we should discuss ethics when we as humans work with AI, and we must be aware of the consequences. But discussing ethics is nothing new when machines perform tasks that could be performed by humans or that demand some kind of human intelligence. In 1942, Isaac Asimov defined the following three laws of robotics:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm

- A robot must obey the orders given by human beings except where such orders would conflict with the first law

- A robot must protect its own existence as long as such protection does not conflict with the first or second laws

At neurospace, we believe these laws are a suitable starting point when we discuss the use of artificial intelligence. However, they clearly need to be extended to fit the current progress in AI.

The challenge resembles the trolley problem. If a self-driving car cannot avoid an accident, whom should the AI learn to protect? And when a driver chooses to activate the autopilot and the car is involved in an accident, who is to blame: the driver, or those who built the AI for the autopilot?

This is why AI is challenging industries such as insurance, where new players are entering the market to compete with more established companies on specialized insurance for autonomous cars.

Chatbots such as Tay are interesting, because they cannot physically harm a human being, as all they do is produce text or speech. However, a person can still be harmed or offended by words, which should also be taken into consideration when defining standards for AI and ethics.

We need to figure out responsibility and accountability when working with AI.

How should we start?

We need to make some guidelines for what is ethically right and wrong when it comes to artificial intelligence. Ethics is a difficult topic, as it can come down to individual values. What I believe to be ethically right might not match the values you hold. Therefore, we need to set some ground rules for what is ethically right.

We also need a clear definition of what artificial intelligence is and which algorithms the rules apply to. The Danish Council on Ethics (Det Etiske Råd) has suggested that we distinguish between strong and weak Artificial Intelligence, a distinction first defined by John R. Searle. However, it is not clearly defined which characteristics make AI strong or weak. Additionally, we need to be clear on when something is machine learning and when something is Artificial Intelligence. Predicting customer behavior, segmenting customers, or performing predictive maintenance on machinery is not artificial intelligence. Autonomous cars, however, are, because they learn by trial and error in a way that is close to how humans learn.

Biased datasets may create or uncover unethical behavior

The ethics of why we build a machine learning model is only one part of the picture. Supervised and unsupervised learning work by finding patterns in the data, for example between past decisions and their outcomes. That means the patterns in the dataset become the foundation for the model's decisions.

An example of unintended behavior comes from Amazon, which created a machine learning model to help its Human Resources department recruit the right people. The algorithm was trained on past applications and their outcomes (hired or not), and thereby on Amazon’s past behavior. Over time, however, Amazon realised that the algorithm had taught itself to filter out applications from women. In other words, the model discriminated against women, and as a consequence Amazon chose to shut it down. Still, the effort was not wasted: because Amazon used their data to create a machine learning model, they realised that they have a problem hiring women. Is this because Amazon’s HR department discriminates against women? Maybe, maybe not. Amazon’s job advertisements often target programmers and software engineers, roles that are more often held by men. It might be that Amazon does not receive many applications from women, and that this imbalanced dataset creates the bias in the model. It could also be that people in the recruitment process unconsciously apply a bias against women.

Another example is Beauty.AI, which created a model to act as the judge in a beauty contest. The model turned out to discriminate against people of color. When investigating the cause, they found a bias in the dataset: it contained more white people than people of color. Again, an imbalanced dataset.

Altinget concludes that we still struggle to build models that do not discriminate against the minorities in a field, such as women in the IT industry.

Therefore, we must make sure our dataset is not biased before we start automating tasks based on our past behavior. This calls for good analytical skills, an ethical mindset, and experimentation, as some correlations can be hard to spot before a model is created.
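One simple first check, sketched here under assumptions, is to look at how each group is represented in the historical data and how often each group received the positive outcome. The group names, the numbers, and the helper functions below are hypothetical. The ratio of the lowest to the highest selection rate is a common summary; in US employment guidance a ratio below 0.8 (the “four-fifths rule”) is often treated as a warning sign.

```python
from collections import Counter

def audit_dataset(records):
    """Report each group's share of the data and its rate of positive outcomes (e.g. hired)."""
    records = list(records)
    totals = Counter(group for group, _ in records)
    positives = Counter(group for group, outcome in records if outcome == 1)
    n = len(records)
    return {
        group: {"share": count / n, "positive_rate": positives[group] / count}
        for group, count in totals.items()
    }

def selection_rate_ratio(report):
    """Lowest positive rate divided by the highest; 1.0 means equal rates across groups."""
    rates = [stats["positive_rate"] for stats in report.values()]
    if max(rates) == 0:
        return 1.0  # nobody received the positive outcome, so the rates are equal
    return min(rates) / max(rates)

# Hypothetical historical hiring data: (group, outcome) with outcome 1 = hired
records = ([("group_a", 1)] * 20 + [("group_a", 0)] * 60
           + [("group_b", 1)] * 3 + [("group_b", 0)] * 17)

report = audit_dataset(records)
ratio = selection_rate_ratio(report)
print(report)
print(round(ratio, 2))  # 0.6
```

Here group_b makes up only 20% of the data and is hired at 15% versus 25% for group_a, both signs that a model trained on this history could learn the same pattern. Such a check only covers the attributes you think to look at; correlations through proxy features can still slip through, which is why experimentation remains necessary.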

With great power comes great responsibility

AI is very powerful and is likely the one technology that will change our lives the most in our lifetime. As humans working within the field of AI, we need to be responsible. But is this enough? Is there a need for rules and guidance? At neurospace, we believe a ruleset is needed to ensure that AI is not used for evil and that private citizens and their data are well protected. According to the idea of robot rights, people (those who create an algorithm or those who use it) should have moral obligations towards their machines (or AI), similar to human rights. Using AI for evil should be considered unethical and should have consequences.

Several organisations have been founded in recent years, such as OpenAI, founded by Elon Musk, Peter Thiel, Reid Hoffman, Jessica Livingston, Greg Brockman, and Sam Altman. Additionally, the Partnership on AI to Benefit People and Society includes people from companies like Facebook, Amazon, Microsoft, OpenAI, IBM, DeepMind, Apple, and Harvard University. The Universal Guidelines for Artificial Intelligence (UGAI) aim to promote transparency and accountability of AI models, so that people retain control over the systems they create.

So how do we move to the next level? All these organisations call for safety and responsible work with artificial intelligence. But today, we have no laws or regulations covering the consequences of building artificial intelligence that could harm people. There should be a set of ground rules decided upon by organisations like the United Nations.

// Maria Hvid, Machine Learning Engineer @ neurospace

References

[1] Machine Learning For Dummies, IBM Limited Edition (2018). John Wiley & Sons, Inc., New Jersey.

[2] Yadav, N., Yadav, A., Kumar, M. (2015). An Introduction to Neural Network Methods for Differential Equations. XIII, 114.